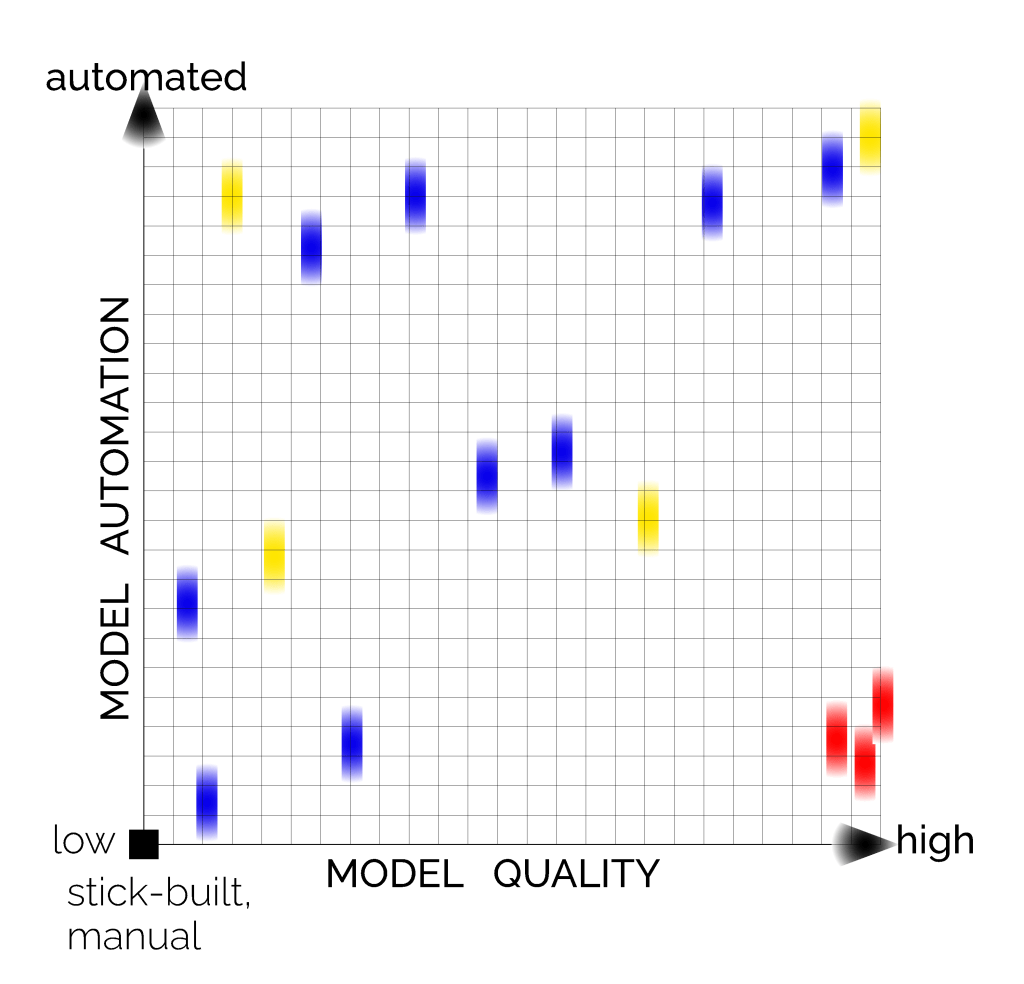

I invite you to think about your own AEC projects and place them on this graph.

The Y axis is the degree of model-creation automation, from stick-built by hand at the bottom to fully automated (by any means) at the top.

The X axis is model quality, from poor on the left to high on the right.

Make your own judgments about the quality and automation of your project models, and place your conclusions on the graph:

For any single project, its position in the quality/automation field is debatable: where exactly should it sit on the graph? But for a large enough set of AEC project models from any one firm, or an aggregate of projects from many firms, the distribution would cover the whole graph.

Correlation?

Some attempts at graphing this will likely show a correlation between model quality and degree of automation. Correlation is more likely, I’d hypothesize, if the automation is finely tuned enough. But of course, the more finely tuned the automation, the more likely it is to drive a project into narrowly defined outcomes that may or may not be suitable, for various reasons. Typically we compromise, landing somewhere on the Y axis between the extremes of total automation and totally ‘hand-built’ digital models.

It’s definitely not my point to argue against model automation. Far from it. Nor do I argue against model quality, that’s for sure. I just point out that if you plot a hundred projects on this graph, there will be little white space left; the whole graph will be covered. That matters for several reasons, including:

- How will anyone know, for a highly automated model and likewise for a manually built one, where the model sits on the quality axis?

- Why would anyone (downstream from the authors) care about the degree of model creation automation, once some useful determination of model quality is made?

- How is that determination of quality made? Where does any lucidity at all about it come from?

On the graph, the points at the bottom right (red) are a few of the projects I worked on from 1998 to 2008. Twenty-five years ago I built digital models of buildings that went pretty far to the right on the quality axis, while model-creation automation was pretty low. They were mostly stick-built by hand, apart from the very basic built-in features then called ‘parametrics’.

Here’s an example: a renovation project converting an abandoned building into an Irish pub near my hometown in Lexington, Kentucky. We called it the ‘iPub’.

Below, you can see a model I built in 2007 and the drawings that were harvested, complete, from that model. I had done projects this way since 1999. That’s a lot of modeling. But that’s not the story that really matters. What matters is how essential those drawings were (that is, LOOKING AT THEM) to successfully completing the model, and to conveying to others, more than superficially, the adequacy and meaning of the model.

That story can and should evolve, with New Equipment for Looking, which I propose here: https://tangerinefocus.com

That model was adequate: of high enough quality to automate all (very nearly 100%) of the non-annotation graphics on every construction drawing sheet. This was in 2007, and I had already done the same on other projects as early as 1999:

And so what?

Where your project sits on a quality/automation graph, whether at very high or very low automation, at very high or very low quality, has no impact at all on your need for (I’ll introduce a phrase) equipment for visual close study (VCS) of the model. Traditionally that equipment takes the form of a set of technical drawings like those above. It’s simply equipment for making clear what needs to be made clear, to yourself and others. It’s equipment that says:

Hey, look over here at this. I’m drawing your attention here so you’ll understand and won’t overlook certain things that matter. Also, I’m making explicit, specific, non-generic affirmations about the quality and suitability of what’s visible where I’m narrowing your attentive focus, and I’m supplying there what’s needed for visual close study.

What’s described above holds true equally:

- whether models are mental and physical only (take a second to reflect on that), as they were before modern computing and software, or

- whether they’re mental, physical, and digital as is typical today.

And yet the visual FORM of this equipment for visual close study (VCS) remains trapped in the form it took centuries ago, even as we’re now well into a fourth decade of digital modeling.

Fragments

Sure, there are any number of fragmentary, partial steps toward VCS equipment in countless modeling applications today. But there is no unifying commonality that makes the equipment broadly recognizable or even discoverable, let alone portable between modelers. And even where an attempt is made at that, the equipment remains greatly underpowered, its articulations not up to the task of VCS.

The industry has been preoccupied first with the introduction of modeling and subsequently with improving model automation. VCS is basically forgotten and abandoned. Its development isn’t merely underfunded; it’s ignored.

This has to change. For modeling’s sake. It’s counterproductive to leave VCS stagnant.

This truth is re-emphasized with every step forward in model automation.

Technical drawing expresses ‘human in the loop’ engagement with MENTAL models. Digital models require an upgrade to this engagement. The need for ‘control rods’ in AI-generated models underscores this yet again.

Take a look at my updated proposal for the future of technical drawing. The proposal website is here: https://tangerinefocus.com

It’s an all-new, concise website with two pages, one about the proposal and one about me, plus a blog with commentary.

The idea of the development proposal is:

Equipment for visual close study (VCS), in models of all kinds.

It’s a continuation, conceptually, of my earlier work on the automatic FUSION (v1.0) of drawings in-situ within models (which now exists in at least nine software applications, after my team first developed and commercialized it in 2012).

No one has developed the new proposal yet. But it is intended to be OPEN, standardizable to support portability across different modeling apps, and respectful of the rich legacy of centuries of technical drawing.

The proposed development UPGRADES technical drawings into a much richer FORM of articulate visual engagement there, in the model, than the v1.0 fusions offered. The upgrade makes it easier for everyone to realize the full purpose of VISUAL CLOSE STUDY: purposeful engagement with complex information environments; the means at hand for making clear, to self and others, what needs to be clear; and, through that, better interpretation and the adequate understanding that supports meaningful action in all AEC phases (design, construction, operations).

The proposed automated fusion and upgrade in FORM of articulated visual close study (otherwise, or formerly, known as ‘technical drawing’) is non-destructive: it’s completely and automatically reversible, and the logic is straightforward.

Two forms of expression, old and new, of equipment for VISUAL CLOSE STUDY: technical drawing and its proposed upgrade, TGN.

What’s proposed, really, is an evolution spurred by drawing’s existence now in-situ within digital models (see the description of the eight features of the VCS/TGN proposal here: https://tangerinefocus.com/visual-engagement-with-modeled-worlds/). It is inspired, by the way, by the same instantiation, by mental exercise alone, of conventional drawing within mental models. It’s what we all do anyway, but now richly assisted by digital media and the software that produces it.

I’m available to help any dev team that wants my assistance designing its implementation of equipment for visual close study (VCS) in models of all kinds. In my opinion, implementations will be better if you bring me in to participate. But, yeah, that’s just my opinion.

My earlier work, now leapfrogged by the VCS/TGN proposal, is here: https://tangerinefocus.com/tgn/earlier-media-innovations/

It now exists in nine software applications, since I first did it in 2012.

So, I’ve done this before and I believe I can do it again.

Because better equipment for visual close study within models is so very much needed, by everyone, and so under-addressed by software development to date.

I’ll keep trying until this happens.

WHAT ABOUT AI?

You say,

What about AI, and AI-generated models?

They’re still models, aren’t they?

We still have to perceive, see, engage, think about, develop, interpret, understand, evaluate, improve, use, and exist in the models.

‘TGN’ equipment within digital models (the expression of what ‘technical drawing’ would look like if it were invented today, rather than long ago when models were mental and physical but not digital) is an optimal host for ‘control rods’.

CONTROL RODS

“Control rods” for human-in-the-loop input back into AI and other ‘generative’ model-creation systems; for human guidance; for laying down control parameters, control markers, and control drivers within models; for feedback into model-generating systems (you understand the idea?): these controls are optimally hosted within TGN rigs, within models.

You can see why, right? It’s not hard to imagine developing a variety of controls that put the ‘human in the loop’ in ways made easily visible, accessible, close at hand, and intelligible, within TGN visual close study (VCS) equipment in AI-generated models and in models generated by any other means (computational and algorithmic, or stick-built tediously by humans).

Let your imagination loose. What control rods would you embed, to guide (AI or NI) generative development of complex models?

Consider control rods in nuclear power: power generation in the plant can run out of control, with negative consequences, hence the control rods:

AI-generated architectural and engineering models likewise have to be kept under control.

- Yes, directed-graph controls are possible, and connectable.

- And there is prompt engineering.

But more work is needed here, and control through equipment for visual close study (VCS) can only be useful.

Here’s Julia Child’s kitchen, by the way. The article by Gary Marcus and Ernest Davis is only about failed Q&A with an LLM about an image, but you can extrapolate the general behavior of these systems to their use in generating AEC models of physical infrastructure. They tend to run out of control, so we need reliable mechanisms for keeping them under control. Not too controlled, though: they can run wild, and serendipitous accidents are welcome, but they must be reined in as required:

I’ll help you build VCS equipment in YOUR modeling apps.

My “knock on doors at software companies with a development proposal” strategy worked before: https://tangerinefocus.com/tgn/earlier-media-innovations/. I think it can work again. I’m knocking right now; do you hear me knockin’?

I can help you build VCS equipment in YOUR modeler. Call me.

I’ve been knocking for a long time, though. Remember this?

Likewise, does anyone but me want the future of technical drawing developed as an open standard form of engagement with digital models? Some thoughts on that here:

What about starting a new company?

The market is huge. AutoCAD, for example, a technical drawing application, still accounts for roughly 60% of Autodesk’s corporate revenue in AEC, even after 30+ years of modeling apps.

There’s enormous terrain ahead for the future of technical drawing, with plenty of room for many companies, both existing ones and new startups.

I can build this. No doubt about it. Just need a funder.

Here’s another song. Put some energy into it (whatever it is). That’s my advice: