Among the many problems created by AEC (architecture, engineering, and construction) software over the last two decades, the decay of visual clarity stings most for me. That comes from my start on paper in school, then AutoCAD and MicroStation drafting at architecture firms in the ’90s, then digital modeling with fervent devotion by ’98, and so on, the usual story. What we thought we were moving toward with software and where we’ve ended up are very different. I gather some popular industry sentiment here:

I intend to contribute to visual clarity again. I propose open source development of a set of features articulating visual close study (VCS), features that act in concert as equipment for looking, for implementation in all AEC modeling (or model-handling) applications whose developers want their users to have it.

Really I’m only proposing to do in software what everyone in this industry does already, necessarily as a core primary perceptual activity >> a visualized INTERPLAY between the wider expansive whole of a complex environment, and an array of articulations of visual close study (VCS).

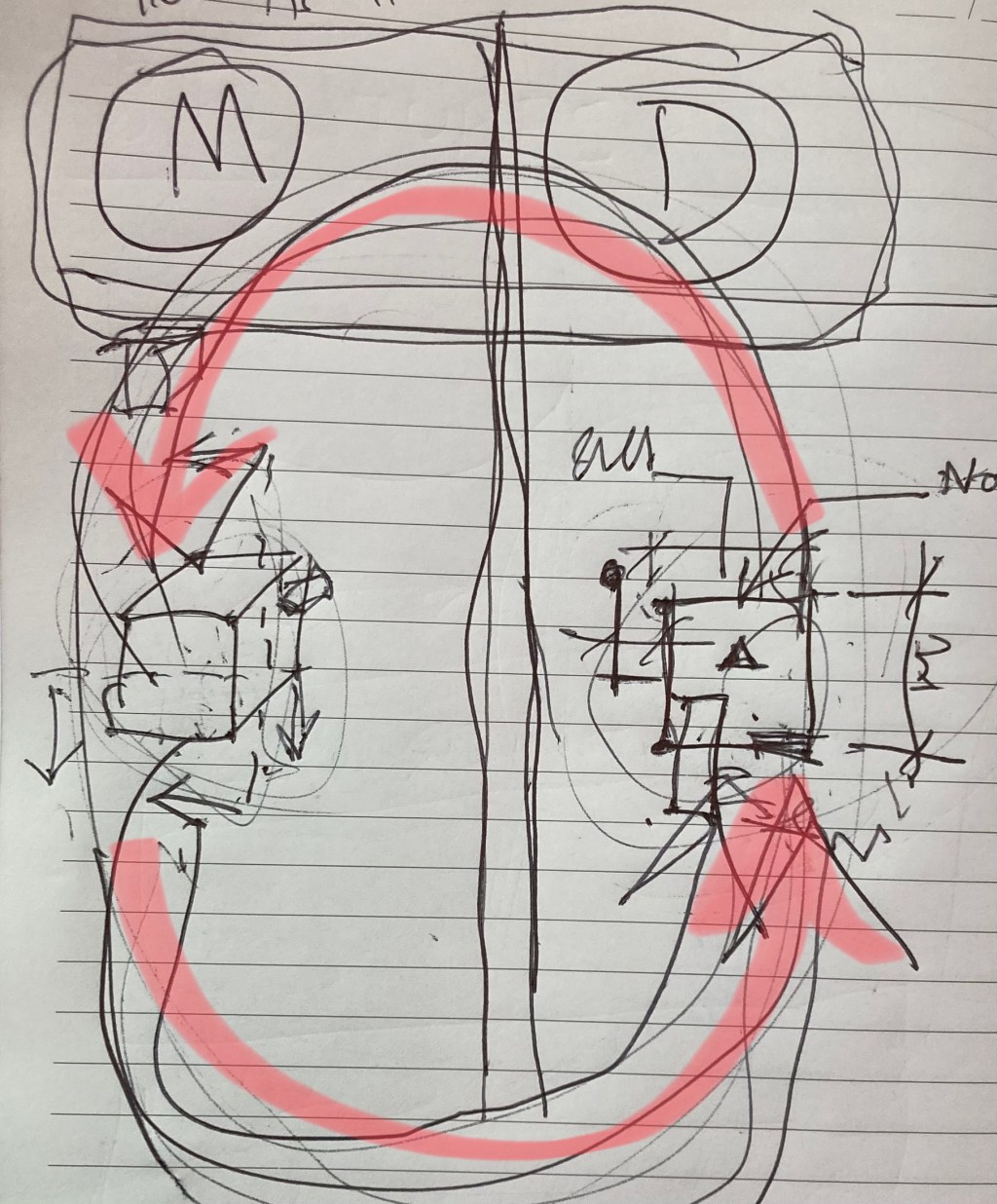

model << >> VCS INTERPLAY

We all do this as mental exercise ONLY, completely unassisted by digital media, because VCS expressions remain to this day confined to their centuries-old form of expression (technical drawing), externalized from the digital model.

This status quo under-powers the INTERPLAY, which of course under-powers the engine of thought itself, leaving the digital model well and truly under-utilized. A waste of potential if there ever was one.

Adequately addressing this failure will go a long way toward mitigating, and then reversing, the generalized DECAY in the quality of VCS deliverables and the widely reported sloppy inadequacy of models generally that we’ve all seen over the last 20 years. A decay that at the very least –correlates– with the popular uptake of digital modeling tools and work process in AEC over the same time period.

We should make a move:

- the future of technical drawing >>

- the future of visual close study [VCS] equipment IN models >>

- the future of the engine of thought itself >>

- more effective thought >>

- better projects >>

- better outcomes >>

- better life >>

- >> then what?

VCS has a future, and its form of expression will evolve in-situ within digital models of all kinds typically used in AEC (architecture, engineering, and construction) and similar industries. You might propose another form of expression for its future, but whatever form you propose is likely to include the 8 core features I propose in this PDF outline:

https://tangerinefocus.com/wp-content/uploads/2024/02/8-features-of-tgn-open-code.pdf

More information here: https://tangerinefocus.com or here: https://tangerinefocus.com/visual-engagement-with-modeled-worlds/

Think for a second about the creation and use of highly elaborated models (mental and digital) of complex projects like buildings (small and large, and very large), bridges, power plants, and so on.

Models of these, mental and digital, don’t come into existence fully formed in an instant. Their development extends over long durations of time, months, years even.

Here’s a simplified encapsulation of some of the important factors in the development of complex mental models and their digital analog (as it were):

We all might reflect on the function of technical drawings, a function inseparable from models (mental and digital). The function is multifold:

1. Technical drawing is an expression of the act of looking somewhere specific within a model. The act of visual close study (VCS) and its articulation.

2. There, at that location, we evaluate: is everything that SHOULD be shown HERE actually shown here? Is anything that matters HERE missing?

3. Finally, at some point after the long work of model development and review, someone with authority to do so AFFIRMS the status of the questions in (2).

4. Along the way, an INTERPLAY is engaged between these many expressions of visual close study (VCS), which articulate the act of narrowed visual attentive focus, and the wider expansive environment of the whole of the project model. This interplay is a back and forth continuous dynamic. There is good argument that it -is- the basic observable dynamic of thought itself, that it IS a machine of thinking, an engine of thought.

The idea that one side of the interplay can either be discarded, or stuck in a non-evolving centuries-old form of expression and externalized from the digital model, is simply self-defeating and counterproductive. Maximally.

I draw your attention to item 4 in particular and emphasize it. Through 30+ years of marketing and development of digital modeling software in the AEC market, VCS has been left largely undeveloped.

This undermines the modeling endeavor itself. The engine of thought itself is left underpowered.

This is a very significant omission from software capability, missing for more than 30 years now.

And it really is obvious:

If a company invents the automobile, unhitches the horse, but fails to design, manufacture, and install a motor… and blames others, that’s well and truly analogous to what AEC software corporations have given us.

Now come on. Think about this.

About both model quality and drawing quality (and again, see this, from the Construction Brothers Podcast): how many of the worst complaints would be well addressed and improved if software development finally put some attention again on VCS development, as a lens for looking at and evaluating model quality, and as a full half of the 2-cycle machine of thinking that IS the continuous perceptual loop of:

model << >> VCS INTERPLAY

At the site below is my proposal for open source development of VCS equipment for implementation in all AEC modeling (or model-handling) applications whose developers want their users to have it:

Are you interested?

Are you a software developer? Or do you have AEC industry experience? Do you want to help bring the future of VCS and what follows from it, to everyone who wants it?

Yes? Message me on LinkedIn: https://www.linkedin.com/in/robsnyder3333/

Any proposal for VCS development in digital models is consistent with typical commentary today regarding the larger tech field of ‘AI’ and ‘Generative AI’.

Some, for sure, take a non-critical view of ‘AI’ and frame it like this:

ignoring or refuting AI at this moment in time is a very brave stance to take… one could say even reckless!

But how about looking at AI thoughtfully, evaluating it rationally?

Of course many do. Here’s an example:

https://garymarcus.substack.com/p/statistics-versus-understanding-the

I like this one that makes a call that’s increasingly typical:

ICYMI, the best papers I have read about GenAI, both by Chirag Shah and Emily Bender, are titled “Situating Search”, published in 2022, and “Envisioning Information Access Systems: What Makes for Good Tools and a Healthy Web?”, published in 2024. And these could not be more relevant in light of Erik Hoel’s suggested epitaph for the Internet’s headstone: “Here lies the Internet, murdered by generative AI”. Hoel sees LLMs as adulterating content and devouring meaning.

Thankfully, the Shah and Bender papers are wonderful antidotes. They discuss the downside of LLM-based searching and, most importantly, the ideal of information systems as critical public goods.

Three key points:

▶ We should be looking to build tools that help users find and make sense of information rather than tools that purport to do it all for them.

▶ As we seek to imagine and build such systems, we would do well to remember that information systems have often been run as public goods and our current era of corporate control of dominant information systems is the aberration.

▶ LLM-based AI interfaces take away transparency and user agency; they further amplify the problems associated with bias in AI systems; and they often provide ungrounded and/or toxic answers that may go unchecked by a typical user.

So grab a coffee – authors Shah and Bender have done us a great service; one that is especially helpful for librarians, teachers, database vendors, and publishers.

HT Byron King, Amy Thurlow

Chirag Shah and Emily M. Bender,

▶ Situating Search. https://lnkd.in/gnSQJvSa.

▶ Envisioning Information Access Systems: What Makes for Good Tools and a Healthy Web? https://lnkd.in/gG4btTis

Erik Hoel

▶ Here lies the internet, murdered by generative AI. https://lnkd.in/guRC8MN4. The accompanying image is “Art for The Intrinsic Perspective” by Alexander Naughton.

https://www.linkedin.com/posts/activelibrarycuration_icymi-the-best-papers-i-have-read-about-activity-7168406249376600064-Nil_

VCS and AI

VCS matters as much as ever if not more, in models made with generative AI.

I foresee AI utility in three situations in which visual close study (VCS) equipment, in digital models of all kinds in AEC, plays a part in AI-assisted modeling and drawing work process:

- Generative AI generates digital models of AEC facilities IN AN ITERATIVE PROCESS: a model is generated and then re-generated, again and again, as the text prompt is tuned over and over. VCS (TGN) rigs placed in these models for human visual close study (automatically, as in the last item of this list, or manually) are an optimal device within which to develop human-in-the-loop guidance mechanisms that allow the user to say, ‘OK, hold -these- parts of the generative model in -this- position/proportion/orientation/etc. and let the rest of the model re-iterate freely, unconstrained.’

- ‘DeepQA’ question and answer against very complex physical asset (infrastructure) digital datasets may possibly be enhanced, giving more productive, more useful conversations with AI about hard questions, very complex systems, and huge volumes of data. VCS (TGN) rigs for human interaction with, and visual close study of, those datasets over the long duration of design, construction, and operation of infrastructure assets MAY reveal data correlations that otherwise would be much harder (or impossible) to make, and a richer field of correlation is a more fertile field for data mining and ‘AI’: https://tangerinefocus.com/tgn/investigations-in-cognitive-computing/

- The use of AI for both automatic placement of VCS (TGN) rigs at useful places throughout a digital model, and for automatic tuning of TGN settings within each of those rigs according to the logic of where they’re placed in the models.
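To make the ‘hold these parts, let the rest re-iterate’ idea in the first item a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration only: the `Rig` class, its `pin` method, and the `regenerate` function are hypothetical names, not part of TGN or of any AEC application, and a single number per element stands in for real position/proportion/orientation data. The random re-roll is only a stand-in for whatever a generative model would actually do on each iteration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Rig:
    """Hypothetical VCS rig: a named place in the model where someone looks closely."""
    name: str
    element_ids: set[str]                           # elements in view at this rig
    pinned: set[str] = field(default_factory=set)   # elements the reviewer wants held

    def pin(self, *ids: str) -> None:
        """Mark elements seen in this rig as 'hold these' for later iterations."""
        unknown = set(ids) - self.element_ids
        if unknown:
            raise ValueError(f"not visible in rig {self.name!r}: {sorted(unknown)}")
        self.pinned.update(ids)


def regenerate(model: dict[str, float], rigs: list[Rig], rng: random.Random) -> dict[str, float]:
    """Stand-in for one generative iteration.

    Elements pinned in any rig keep their current value; every other
    element is re-rolled freely (here, just a random number).
    """
    held = set().union(*(rig.pinned for rig in rigs))
    return {
        eid: value if eid in held else rng.uniform(0.0, 10.0)
        for eid, value in model.items()
    }


if __name__ == "__main__":
    rng = random.Random(0)
    model = {"wall_a": 1.0, "wall_b": 2.0, "stair": 3.0}
    rig = Rig(name="stair core", element_ids={"wall_a", "stair"})
    rig.pin("stair")                      # the human-in-the-loop decision
    for _ in range(3):                    # prompt tuned, model re-generated
        model = regenerate(model, [rig], rng)
    print(model["stair"])                 # the pinned element is unchanged
```

The point of the sketch is only where the decision lives: the person pins elements from inside a rig, at the place where they are looking, and the generator is obliged to respect that on every pass.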

Regarding item 1 above, recall the earlier, now 20-year-old version of generative modeling in AEC and its consistency with the general call today for “tools that help users find and make sense of information” and “interfaces that supply transparency and user agency”.

20 years’ worth of computational/generative design should not be forgotten because of ‘AI’. Its practitioners have been at it a long time, and their modus operandi is the creation and use of apps that allow them to author their own dependency chains and algorithms, with full transparency into what those are, made visible through directed graphs, code inspection, and the resultant modeled geometry in a graphics window simultaneously.
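For readers who haven’t used those tools, here is a rough sketch of what an authored, fully inspectable dependency chain amounts to. It is an illustration only, assuming a toy graph of my own invention (`bay_width`, `bay_count`, and so on are hypothetical parameter names) and using Python’s standard-library `graphlib.TopologicalSorter` to order the evaluation. It is not how any particular computational design application is implemented.

```python
from graphlib import TopologicalSorter

# Each node lists its upstream nodes and the rule that computes it.
# The whole chain is plain data: anyone can read it, nothing is hidden.
graph = {
    "bay_width": ([], lambda: 6.0),
    "bay_count": ([], lambda: 4),
    "length": (["bay_width", "bay_count"], lambda w, n: w * n),
    "column_xs": (["bay_width", "bay_count"],
                  lambda w, n: [i * w for i in range(n + 1)]),
}


def evaluate(graph):
    """Evaluate every node after its dependencies and keep a readable trace."""
    deps_only = {name: deps for name, (deps, _) in graph.items()}
    values, trace = {}, []
    for name in TopologicalSorter(deps_only).static_order():
        deps, rule = graph[name]
        values[name] = rule(*(values[d] for d in deps))
        trace.append((name, tuple(deps), values[name]))
    return values, trace


if __name__ == "__main__":
    values, trace = evaluate(graph)
    for name, deps, value in trace:
        print(f"{name} <- {deps}: {value}")
```

The trace is the transparency: every value can be followed back to the inputs and rule that produced it, which is exactly what is hard to get from a text prompt.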

Some of us used to think of their work as a mysterious art and the masters of it wizards. Little did we know what was coming. These first generation generative/computational design gurus all look like the most forthright people anybody’s ever seen by comparison.

If you want VCS in your modeling app, message me on LinkedIn: https://www.linkedin.com/in/robsnyder3333/

As some have noticed I’ve spent a long time talking about this and no time DOING it. Yet. Yeah, that’s on target 🎯. I’m a slow learner on business starts. But change is coming unless I fail completely. Who knows?

TGN is for visual close study of digital models. It’s equipment for LOOKING, just as technical drawing, in VCS’s centuries-old conventional form of expression, is for close study of mental models.

https://tangerinefocus.com